Natural Language

Preface

Prerequisites

Learning ethics

Introduction

What is X?

Name matching has to cope with many kinds of variation: phonetic similarity, transliteration, nicknames, missing spaces or hyphens, titles and honorifics, truncated name components, missing name components, out-of-order name components, initials, names split inconsistently across database fields, the same name in multiple languages, semantically similar names, and semantically similar names across languages.
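
A few of these variations (titles and honorifics, hyphens, accents, out-of-order components) can be handled by normalizing names before comparing them. The following is a minimal sketch, not a complete matcher: `TITLES`, `normalize_name`, and `same_name` are hypothetical names introduced here for illustration, and the title list is deliberately tiny.

```python
import re
import unicodedata

# Hypothetical, deliberately small title list; a real system would need many more.
TITLES = {"mr", "mrs", "ms", "dr", "prof", "sir"}


def normalize_name(name: str) -> tuple:
    """Return a sorted tuple of lowercase name tokens with titles removed."""
    # Strip accents so that "José" and "Jose" compare equal.
    decomposed = unicodedata.normalize("NFKD", name)
    unaccented = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    # Treat whitespace, hyphens, periods, and commas as separators.
    tokens = re.split(r"[\s\-.,]+", unaccented.lower())
    # Drop empty tokens and titles; sort so component order does not matter.
    return tuple(sorted(t for t in tokens if t and t not in TITLES))


def same_name(a: str, b: str) -> bool:
    """True when both names normalize to the same set of components."""
    return normalize_name(a) == normalize_name(b)


print(same_name("Dr. Jean-Luc Picard", "Picard, Jean Luc"))
print(same_name("José García", "Garcia, Jose"))
print(same_name("Bob Smith", "Robert Smith"))
```

The first two comparisons match and the third does not: nicknames, transliteration, and phonetic similarity need dedicated resources (nickname tables, phonetic encodings) that this sketch leaves out.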

Named Entity Recognition

Why does X matter to you?

Research

Ecosystem

Standards, jobs, industry, roles, …

- IberLEF is a shared evaluation campaign for natural language processing systems in Spanish and other Iberian languages.

- CLEF consists of a conference on a wide range of NLP topics and a set of labs and workshops designed to test different aspects of information retrieval systems.

- SemEval is a series of international natural language processing (NLP) research workshops whose mission is to advance the state of the art in semantic analysis.

Gensim: https://radimrehurek.com/gensim/models/word2vec.html

spaCy: https://spacy.io/

NLTK: https://www.nltk.org/

Lucene / Solr

Elasticsearch

https://stanfordnlp.github.io/CoreNLP/

Algolia

General Architecture for Text Engineering (GATE)

Apache OpenNLP

https://explosion.ai/software#prodigy

Story

FAQ

Worked examples

Fundamental Algorithms

Formal approaches

Regex

Lexers and Parsers

Approximate string matching

https://www.youtube.com/watch?v=s0YSKiFdj8Q
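
One common basis for approximate string matching is the Levenshtein (edit) distance: the minimum number of single-character insertions, deletions, and substitutions needed to turn one string into another. The sketch below is one illustrative way to compute it with dynamic programming, assuming unit cost for every operation; the function name `levenshtein` is chosen here for illustration, and the code is not taken from the linked video.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance with unit-cost insertions, deletions, and substitutions."""
    # previous[j] is the distance between the prefix of `a` seen so far and b[:j].
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]  # distance from a[:i] to the empty string
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(
                previous[j] + 1,         # delete ca
                current[j - 1] + 1,      # insert cb
                previous[j - 1] + cost,  # substitute, or match at no cost
            ))
        previous = current
    return previous[-1]


print(levenshtein("kitten", "sitting"))  # 3
```

Keeping only two rows of the table reduces memory to the length of `b`, at the price of not being able to recover the actual sequence of edits.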

Subsubsection

N-grams
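
An n-gram is a contiguous run of n items, either characters or words. As a minimal sketch, the helper below extracts n-grams from any sequence, and a second function compares two strings by the Jaccard overlap of their character bigrams, which ties n-grams back to approximate matching. The names `ngrams` and `ngram_similarity` and the `_` padding character are assumptions made for this example.

```python
def ngrams(seq, n):
    """Return all contiguous n-grams of a sequence (string or token list)."""
    return [tuple(seq[i:i + n]) for i in range(len(seq) - n + 1)]


def ngram_similarity(a: str, b: str, n: int = 2) -> float:
    """Jaccard similarity between the character n-gram sets of two strings."""
    # Pad with "_" so that the first and last characters also form n-grams.
    pad = "_" * (n - 1)
    grams_a = set(ngrams(pad + a.lower() + pad, n))
    grams_b = set(ngrams(pad + b.lower() + pad, n))
    if not grams_a and not grams_b:
        return 1.0
    return len(grams_a & grams_b) / len(grams_a | grams_b)


# Word bigrams from a tokenized sentence.
print(ngrams("the cat sat".split(), 2))
# Character-bigram overlap between two related words.
print(ngram_similarity("night", "nacht"))
```

The same `ngrams` helper serves both levels: word n-grams are the usual input to language models, while character n-grams tolerate typos and spelling variants.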

Exercises

- Logic. Mathematics. Code. Automatic verification, for example with the Lean prover or Frama-C.

- Languages in Anki.

Projects

Summary

FAQ

Reference Notes

Next steps

References

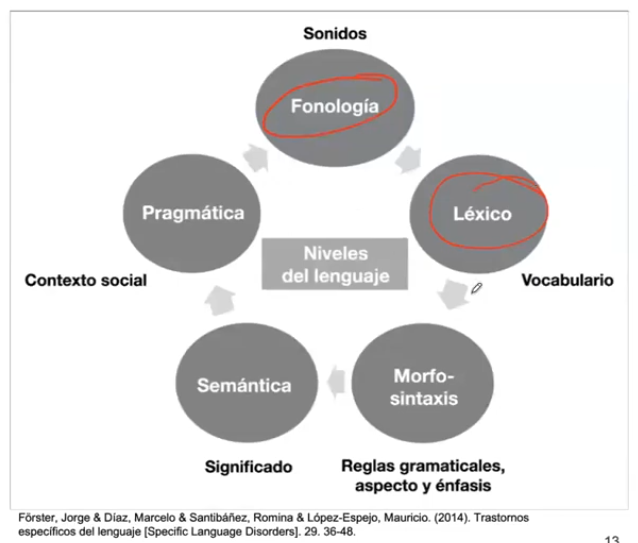

The dimensions of language

graph TD

Phonology --> Lexer

Lexer --> Parser

Parser --> Semantics

Semantics --> Pragmatics

Pragmatics --> Phonology

seq2seq architecture

Evaluation metrics

Parametrics

Speech recognition

Machine translation

References

Speech and Language Processing by Dan Jurafsky and James H. Martin

https://ipfs.io/ipfs/QmdVkKvX5JBSNTWjNBW5LdbPFTT8wLQb1vCJtUzXRppXbg?filename=nlp.pdf

Book

- Speech and Language Processing: chapter 6, sections 3-8: https://web.stanford.edu/~jurafsky/slp3/6.pdf

Articles

- Original Word2Vec paper: Efficient Estimation of Word Representations in Vector Space

- Paper discussing the biases that can exist in embeddings: Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings

@inproceedings{bender-koller-2020-climbing,

title = "Climbing towards {NLU}: {On} Meaning, Form, and Understanding in the Age of Data",

author = "Bender, Emily M. and

Koller, Alexander",

booktitle = "Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics",

month = jul,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2020.acl-main.463",

doi = "10.18653/v1/2020.acl-main.463",

pages = "5185--5198",

abstract = "The success of the large neural language models on many NLP tasks is exciting. However, we find that these successes sometimes lead to hype in which these models are being described as {``}understanding{''} language or capturing {``}meaning{''}. In this position paper, we argue that a system trained only on form has a priori no way to learn meaning. In keeping with the ACL 2020 theme of {``}Taking Stock of Where We{'}ve Been and Where We{'}re Going{''}, we argue that a clear understanding of the distinction between form and meaning will help guide the field towards better science around natural language understanding.",

}

MACTI

https://www.youtube.com/watch?v=D_R3swIzLP0

https://www.youtube.com/watch?v=dCdGFHURx5g

https://www.youtube.com/watch?v=Z6GCalIGnSc

https://www.youtube.com/watch?v=ruiCm5SGtYg

https://www.youtube.com/watch?v=iu3XkI6-RvE

https://drive.google.com/drive/folders/1G6-5yUlqDAKHJX14sZ0L3MeZ6iA4LsHm?usp=sharing

Natural Language for Communication