Distributed Systems

Preface

Prerequisites

Learning ethics

Introduction

What is Distributed Computing?

A distributed computer system consists of multiple software components that run concurrently but behave as a single, transparent system. Real distribution is achieved when multiple nodes, each using its own processing units (CPU, GPU, or TPU), cooperate over a network. However, we can also apply the same algorithms and architectures in local environments. What happens with async?

"Concurrency vs Parallelism" is a useless dichotomy especially in the world of coroutines, fibers, and intelligent schedulers.

There’s a crisp mathematical distinction. Concurrency means independent interacting computations. Parallelism means independent isolated computations. This is completely orthogonal to hardware/software boundaries. You can write this down in many process calculi. For example, in the rho calculus the process P1 | … | Pn is parallel if for all 1 <= i, j <= n FN( Pi ) \cap FN( Pj ) = \empty; and concurrent otherwise. It’s sad that so little of concurrency theory has penetrated into the communities that need it most.

https://twitter.com/leithaus/status/1699568865121104098
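The distinction quoted above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each process is reduced to a hypothetical set of free names (the channels it can use), and the disjointness condition is read as applying to distinct pairs of processes (i ≠ j). The function name `is_parallel` is made up for this sketch.

```python
from itertools import combinations


def is_parallel(free_names):
    """Return True when every pair of distinct processes has disjoint free names.

    `free_names` is a list of sets; set number i stands for FN(Pi).
    """
    return all(a.isdisjoint(b) for a, b in combinations(free_names, 2))


# Hypothetical processes, described only by the channel names they use.
isolated = [{"a"}, {"b"}, {"c"}]          # no shared names: parallel
interacting = [{"a", "x"}, {"x", "b"}]    # both can use "x": concurrent

print(is_parallel(isolated))
print(is_parallel(interacting))
```

Under this reading, two processes that share even one free name can interact, so the composition counts as concurrent rather than parallel.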

Single clock and scheduler

Multiprocessor Systems

Multi-core Processors

Graphics Processing Unit (GPU) Computing

Characteristics.

Transparency.

Global Scheduler.

Design. Interfaces. Open-closed principle.

High-performance distributed computing.

Pervasive systems (Supranet)

Federated systems

Serverless, Blockchain

Cloud

Decentralized

The model

A common misconception is that distributed systems and microservices are the same thing; in fact, microservices are one way to implement a distributed system.

Types of distribution transparency

Access

Location

Relocation

Migration

Replication

Concurrency

Failure.

Pitfalls

Types of distributed systems

Grid computing.

Cloud computing.

Cluster computing.

Distributed information systems

Enterprise Application Integration

Primitives.

ACID properties.

Pervasive systems

Mobile systems

Embedded Devices

Sensor Networks

Why does Distributed Computing matter to you?

If you have performance issues in a process that is parallelizable, intensive I/O operations, or a need for a reliable system, you should look into distributed computing.

- Data engineering

- Network applications (real-time editors, offline-first systems)

- Decentralized systems

Research

Ecosystem

Standards, jobs, industry, roles, …

Clouds

Hadoop

Docker

Story

Case study

Google File System (GFS)

Summary

Code

@startuml !theme crt-amber class ComputerSystem class User package "Overlay networks" <<Cloud>> { class Node { location data status software process hardware device resources +process(message: Message) +send(message: Message) +receive(message: Message) } package Middleware <<Frame>> { class "Middleware" as MW { reliability networked environment communicate() transact() security(service) account(service) compose(service: Service) } package "Application 1" <<Node>> { class "Computer 1" { local operating system } } package "Application 2" <<Node>> { class "Computer 2" { local operating system } class "Computer 3" { local operating system } } package "Application 3" <<Node>> { class "Computer 4" { local operating system } } } } note left of Middleware: Operating system : Computer :: Middleware :: Distributed system class "Distributed system" as DS { trade-offs sufficiently are suffienclythe spread across network. false assumptions by Peter Deutsch, = [ the network is reliable, secure, homogenous, the topology does not change, latency is zero, bandwidth is infinite, transport cost is zero, there is one administrator ] distribution transparencies based on user needs and performance = [ {degree of grade: Float, type: 'Access'}, {degree of grade: Float, type: 'Location'}, {degree of grade: Float, type: 'Relocation'}, {degree of grade: Float, type: 'Migration'}, {degree of grade: Float, type: 'Replication'}, {degree of grade: Float, type: 'Replication'}, {degree of grade: Float, type: 'Concurrency'}, {degree of grade: Float, type: 'Failure'} ] exploit parallelism() } note right: A transparent system is Unix-like in which everything is a file class "Good Distributed system" as GoodDS { goals = [supporting resource sharing, being open, being scalable, flexibility] scalability dimensions = [size, geographical, administrative] // scalability means that X factor increases the amount of work the system keeps the same performance. opposed to a monolithic system i.e. microservices. 
hide(resource across a network) support(resource sharing for all kinds of groupware) separate policy from mechanism() offer(): Components based on Interface Definition Language //openess = completeness+ neutrality+interoperability+composability+etensibility scaling up() scaling out(technique: Hiding communication latencies and bandwidth restrictions | Partitioning and distribution | Replication ) span multiple administrative domains() } class DistributedInformationSystems { stable wealth of networked applications difficult interoperability entrprise-wide information } class Transaction { types = ["system call", "library procedure", "language"] atomic consistent isolated durable begin() end() abort() read() write() call() //follow logical divisions } Transaction -->"parallel" Transaction Database -->"old days, request/reply behavior" TransactionProcessingMonitor class Database DistributedInformationSystems <|--"tp monitor" Database DistributedInformationSystems <|--"exchange information directly" EnterpriseApplicationIntegration EnterpriseApplicationIntegration -->"coupling" RemoteProcedureCall EnterpriseApplicationIntegration -->"coupling" RemoteMethodInvocation EnterpriseApplicationIntegration -->"publish/subscribe" MessageOrientedMiddleware MessageOrientedMiddleware ..|> RSS DistributedInformationSystems *-- Server Clients -->"distributed transactions" Server class PervasiveSystems { unstable users and system are closer unobtrusive no system administrator. 
small, battery-powered, mobile nodes nodes often have a wireless connection ad hoc fashion a lot of sensors a lot of actuators } note right: Internet of Things is its marketing name class UbiquitousComputing { transparency is crucial continuously present unobtrusiveness is crucial user has more interaction, and more implicit actions, our system hides interfaces context awareness is any relevant information between the user and the system autonomy from human intervention artificial intelligence in an open environment } class MobileSystems { use wireless communication location change over time disruption-tolerant networks: connectivity is not guaranteed redundant communication } class SensorNetworks { ten to hundreds or thousands of small nodes with each other limited resources power consumption is critical operator's site or network datask queries askQueries(abstract query) } SensorNetworks --|> TinyDB SensorNetworks --|> SpecialNodes MobileSystems --|> GPS MobileSystems --|> CarEquipment MobileSystems --|> MANET PervasiveSystems <|-- UbiquitousComputing PervasiveSystems <|-- MobileSystems PervasiveSystems <|-- SensorNetworks PervasiveSystems *-- Mobile PervasiveSystems *-- EmbeddedDevices class HighPerfomance { stable } class ClusterComputing { homogeneity nodes = [...similar nodes (hardware and OS) closely connected in LAN] use(parallel and compute-intensive program) } ClusterComputing *-- MasterNode ClusterComputing *-- ComputeNode class GridComputing { federation of nodes in form the virtual organization = [...different nodes (hardware, OS, and networks)] worldwide } GridComputing *-- Applications Applications --> CollectiveLayer CollectiveLayer --> ConnectivityLayer CollectiveLayer --> ResourceLayer ConnectivityLayer --> FabricLayer ResourceLayer --> FabricLayer class CloudComputing { nodes = [...outsource()] vendors = [Google, Amazon, Azure] utility computing virtualized resources outsource(): grid nodes provide facilities() } CloudComputing 
-->"Software-as-a-service (Saas)" Applications Applications -->"Platform-as-a-service (Paas)" Platforms Platforms -->"Infrastructure-as-service (IaaS)" Infrastructure Infrastructure --> Hardware DS <|-- HighPerfomance DS <|-- DistributedInformationSystems DS <|-- PervasiveSystems HighPerfomance <|-- GridComputing HighPerfomance <|-- ClusterComputing GridComputing <|-- CloudComputing note right: Why? Because it is easy for users to access, share, collaborate, and exchange remote resources. class Communication class "Synchronous communication" as sc class "Asynchronous communication" as asc class "Batch-processing system" as batch class "Stream system" as stream class "Parallel work" as parallel batch --> asc parallel --> asc ComputerSystem <|--"autonomous" DS DS <|-- GoodDS Communication <|-- sc Communication <|-- asc Node <|-- "Computer 1" Node <|-- "Computer 2" Node <|-- "Computer 3" Node <|-- "Computer 4" DS *-- Node: Nodes operate as a whole the same way, no matter where, when, and how. User -right--> "single coherent system" DS Node --> Communication Communication --> Node Node --> batch Node --> stream Node --> parallel GridComputing ..|> MOSIX DS .|> ContentDeliveryNetwork ComputerSystem <|-- DecentralizedSystem class DecentralizedSystem{ resources are necessarily spread aa cross the network. } DecentralizedSystem ..|> Blockchain @enduml

FAQ

Worked examples

Concurrent and Parallel Principles

Parallel and concurrent process

Primitives of concurrent programs

CPU-bound operations and IO-bound operations

Coroutines make sense for IO-bound operations because X. On the other hand, threads make sense for CPU-bound operations. Processes make sense when you would like to implement the Actor Model.
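The IO-bound case can be illustrated with a minimal sketch. It assumes that `asyncio.sleep` is an acceptable stand-in for a real network or disk wait, and the names `fake_io` and `main` are hypothetical. Five waits of 0.1 s each overlap on a single thread, so the total elapsed time stays close to 0.1 s instead of 0.5 s.

```python
import asyncio
import time


async def fake_io(name, delay):
    # asyncio.sleep stands in for a network or disk wait (an assumption of
    # this sketch); while it is pending, the event loop runs other coroutines.
    await asyncio.sleep(delay)
    return name


async def main():
    start = time.perf_counter()
    # gather() schedules all coroutines at once and keeps the input order.
    results = await asyncio.gather(*(fake_io(f"req-{i}", 0.1) for i in range(5)))
    elapsed = time.perf_counter() - start
    return results, elapsed


results, elapsed = asyncio.run(main())
print(results, f"{elapsed:.2f}s")
```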

Threads

Kernel Threads and User threads are suitable for CPU-bound tasks.

Processes and Actor model

Coroutines

Futures, promises and the async/await pattern

Cooperative multitasking

Yield

Event driven and callbacks

Reactive programming

Asynchronous and synchronous Programming

Concurrency

- Synchronous operation

- Asynchronous operation

- Isochronous

Parallelism

- Load Balancing

- Load Shedding

Deadlocks

Reactive and Deliberative Systems

Logging and Distributed Management

Push-Pull vs Subscribe

Dealing with Time

Reactive programming

Functional reactive programming

Throughput

Overlay Network

Latency

Shared Memory vs Message Passing

Async/await, Futures and promises, Coroutines, Channels

Idempotent

Formally, we say that an operation $f$ is idempotent if $f(f(x)) = f(x)$ for all $x$.

For example, suppose the operation queries the balance of my bank account: you can run it as many times as you like and always get the same amount, so it is idempotent. However, transferring $3145 from my bank account is not idempotent, because every repetition changes the state again.
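The bank account example can be sketched as follows. The `Account` class and its methods are hypothetical. The `withdraw_once` method shows one common way to make a state-changing operation safe to repeat, namely a request identifier (an idempotency key); that technique is an addition of this sketch and is not described in the text above.

```python
class Account:
    """Hypothetical bank account used to contrast the two kinds of operation."""

    def __init__(self, balance):
        self.balance = balance
        self.applied_requests = set()

    def get_balance(self):
        # Idempotent: calling it repeatedly never changes the state.
        return self.balance

    def withdraw(self, amount):
        # Not idempotent: every call changes the balance again.
        self.balance -= amount

    def withdraw_once(self, request_id, amount):
        # Made idempotent with a request identifier: a retry with the same
        # identifier is recognized and ignored.
        if request_id not in self.applied_requests:
            self.applied_requests.add(request_id)
            self.balance -= amount


account = Account(10_000)
account.withdraw(3145)
account.withdraw(3145)                 # a naive retry withdraws twice
print(account.get_balance())           # 3710

safe = Account(10_000)
safe.withdraw_once("req-1", 3145)
safe.withdraw_once("req-1", 3145)      # the retry has no further effect
print(safe.get_balance())              # 6855
```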

| Model | Properties | Example |
|---|---|---|
| Multi-threading | Parallelism, blocking system calls | Web Workers in the browser |
| Single-threaded process | No parallelism, blocking system calls | Typical synchronous function |
| Finite-state machine | Parallelism, nonblocking system calls, asynchronous operation | Event loop in the browser |

Starvation

This refers to a situation in which a task is prevented from accessing a resource that it needs, due to the actions of other tasks.

Synchronization

Dining philosophers problem

Fork–join model

Producer–consumer problem

Readers–writers problem

Mutual exclusion, locks or mutex

Race conditions

Semaphore

Global interpreter lock

Green thread

Giant lock

Symmetric multiprocessing

Distributed Computing Paradigms

https://arxiv.org/pdf/1311.3070.pdf

Cloud

Grid

Cluster

SCOOP (Scalable COncurrent Operations in Python)

Threads, workers, processes, tasks, jobs

Concurrency patterns

https://en.wikipedia.org/wiki/Concurrency_pattern

Active Object

Balking pattern

Barrier

Double-checked locking

Guarded suspension

Leaders/followers pattern

Monitor Object

Nuclear reaction

Reactor pattern

Read write lock pattern

Scheduler pattern

Pool pattern

Given a problem that needs workers (or a pool) and one shared resource, we have two approaches based on either Queue or Mutex.
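The two approaches can be compared side by side in a minimal sketch. It assumes that the shared resource is a simple counter; the function names `with_mutex` and `with_queue` are hypothetical. In the first version every worker updates the shared state under a lock. In the second, workers only put messages on a queue and a single owner updates the state.

```python
import queue
import threading


def with_mutex(n_workers, items_per_worker):
    """Workers update the shared total directly, protected by a lock."""
    total = 0
    lock = threading.Lock()

    def worker():
        nonlocal total
        for _ in range(items_per_worker):
            with lock:  # only one worker at a time may touch `total`
                total += 1

    threads = [threading.Thread(target=worker) for _ in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def with_queue(n_workers, items_per_worker):
    """Workers never touch the total; they send results through a queue."""
    results = queue.Queue()

    def worker():
        for _ in range(items_per_worker):
            results.put(1)

    threads = [threading.Thread(target=worker) for _ in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    # All producers have finished, so a single consumer can drain the queue.
    total = 0
    while not results.empty():
        total += results.get()
    return total


print(with_mutex(4, 100), with_queue(4, 100))
```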

import concurrent.futures
import os
import random
import time

def f(x):
    # Simulate a slow task that fails about half of the time.
    time.sleep(random.randint(1, 5))
    if random.randint(1, 2) == 2:
        raise Exception("Oops! Something went wrong")
    return x

N = 10
index = 0  # number of completed tasks, used for the progress indicator

with concurrent.futures.ThreadPoolExecutor(max_workers=os.cpu_count()) as executor:
    future_to_value = {executor.submit(f, f'example.com/{i}'): i for i in range(0, N)}
    for future in concurrent.futures.as_completed(future_to_value):
        i = future_to_value[future]
        index += 1
        print(f"{index*100/N:.2f}% ", end="\r")
        try:
            data = future.result()
            # We have shared memory that changes as new data arrives
            print(data)
        except Exception as exc:
            print('%r generated an exception: %s' % (i, exc))

import time

from joblib import Memory, Parallel, delayed

def f(x):
    try:
        time.sleep(1)
        if x == 1:
            raise Exception("1")
        return x
    except Exception as e:
        # Return the error instead of raising it so the other jobs keep running.
        return Exception(f"{x} raises an error: {e}")

# Cache results on disk so that a rerun skips inputs that were already computed.
costly_compute_cached = Memory(location='./checkpoints3', verbose=0).cache(f)

shared_memory = []

# return_as='generator' is only available in recent joblib releases.
for result in Parallel(n_jobs=-1, return_as='generator', verbose=1)(delayed(costly_compute_cached)(x) for x in range(10)):
    if isinstance(result, Exception):
        print(result)
    else:
        shared_memory.append(result)

shared_memory

// Requires java.util.Random, java.util.concurrent.atomic.AtomicInteger,
// and java.util.function.BiFunction.
var winner = new Object() { int winner = 0; };

BiFunction<AtomicInteger, Integer, Runnable> worker = (distance, index) -> () -> {
    while (true) {
        // Perform operations with no shared resources
        distance.addAndGet(new Random().nextInt(9) + 1);
        /* Perform operations with shared resources.
           These operations must be synchronized.
           The operating system chooses the next worker.
           The rest that need this resource are put to sleep.
        */
        synchronized (winner) {
            if (winner.winner != 0) {
                break;
            }
            if (distance.get() >= 1000) {
                winner.winner = index;
            }
            System.out.println(winner.winner);
        }
    }
};

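let winner = 0;

const sleep = (time) => new Promise(r => setTimeout(r, time));

// Random integer in [min, max]
const randint = (min, max) => Math.floor(Math.random() * (max - min + 1)) + min;

const horse = async (index, distance = 0) => {
    while (winner === 0) {
        console.log(index, distance, winner);
        await sleep(randint(1, 20));
        distance = distance + randint(15, 20);
        // Another horse may have won while this one was sleeping,
        // so only claim the win if nobody has it yet.
        if (winner === 0 && distance >= 100) {
            winner = index;
        }
    }
    console.log(index, distance, winner);
    return index;
}

// Immutable.Range comes from the Immutable.js library
Promise.race(Immutable.Range(1, 10).map(x => horse(x)))

Thread-local storage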

Case study. Unix and Bash.

Case study. JavaScript.

Event loop.

Stack, microtasks, macrotasks, promises, callbacks, html elements, and custom events, subscribers, streams

Case study. Python.

Coroutines

Threads

Multiprocessing library

JobLib

Luigi

Dask

Ray

Case study. Go.

Coroutines

Case study. C and C++.

Case study. Java.

https://replit.com/@sanchezcarlosjr/distributed-systems-2023-1

Case study. Haskell.

We classify programming languages as stateful or stateless to abstract the concurrent and parallel principles, even though people have named them in different ways over the years. Stateful languages are those that encourage us to assign variables; stateless languages, on the other hand, are those that do not. JavaScript, Go, and C are stateful languages, while Haskell and Erlang are stateless. Immutability. Mutability.

Semigroups and monoids

Group theory in cryptography

Case study. Erlang and Elixir.

https://www.youtube.com/watch?v=cLno3Wf720c&ab_channel=rvirding

Case study. Scala.

Case study. Blockchain smart contracts.

Coroutines

Foundations

Timed Input/Output Automaton (TIOA)

https://github.com/online-ml/river

Distributed algorithms

Models

https://en.wikipedia.org/wiki/Concurrency_(computer_science)

- The actor model

- Computational bridging models such as the bulk synchronous parallel (BSP) model

- Tuple spaces, e.g., Linda

- TLA+

TLA+ Language

PlusCal

https://lamport.azurewebsites.net/tla/book.html

Process calculus

Process Algebra

Algebraic Theory of Processes

π-calculus

References

Pierce, Benjamin (1996-12-21). "Foundational Calculi for Programming Languages". The Computer Science and Engineering Handbook. CRC Press. pp. 2190–2207. ISBN 0-8493-2909-4.

MIT OpenCourseWare. (2023, January 27). MIT OpenCourseWare. Retrieved from https://ocw.mit.edu/courses/6-046j-design-and-analysis-of-algorithms-spring-2015/resources/lecture-20-asynchronous-distributed-algorithms-shortest-paths-spanning-trees

http://profesores.elo.utfsm.cl/~agv//elo330/

Architectures

Architectural styles

Architectural styles have two main views: the software organization and the hardware organization. A style is described by a set of components, the way they are connected to each other, and how they are configured into a system. A component is a replaceable modular unit with interfaces [OMG, 2004b], where “replaceable” means that during system operation you can swap its implementation, or even its interface, for another one; bear in mind, though, that changing interfaces is a real pain. Components are joined by a “connector”, a mechanism that mediates communication, coordination, or cooperation among them. Examples of connectors are remote procedure calls, message passing, and streaming data.

Which architectural style you need depends on the style of data processing. We distinguish three kinds of systems based on how they handle data:

- Client/server systems. The client sends a query or request, and the server processes the data and returns a response or result. Another way to think of it: the client calls a remote procedure that lives on the server through an application protocol (e.g. HTTP). Both sides must agree on some semantics to deal with asynchrony, payload formats, and locating entities. MV* software architectures fit well with client/server systems.

- Batch processing systems, also known as offline systems, take a large dataset, run a job to process it, and produce some output data. Once the user submits the job, no further interaction is required, as these jobs typically take a while to finish. This is the characteristic feature of an offline system. However, jobs are often scheduled to run periodically. For example, users can set up cron jobs to run once a month for tasks such as saving backups, training machine learning models, or performing bulk operations on digital images.

- Stream processing systems, also known as near-real-time systems, operate on events shortly after they happen. Isochronous.
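The difference between the batch and stream styles in the list above can be sketched on a toy computation. This is only an illustration under stated assumptions: the "dataset" is a short list of numbers, and the function names `batch_total` and `stream_totals` are hypothetical. The batch job sees the whole dataset and returns one result at the end; the stream job emits an updated result shortly after each event.

```python
def batch_total(events):
    """Batch style: take the whole dataset, run the job, produce one output."""
    return sum(events)


def stream_totals(events):
    """Stream style: produce an updated output shortly after each event."""
    total = 0
    for event in events:
        total += event
        yield total


events = [5, 3, 8]
print(batch_total(events))          # 16
print(list(stream_totals(events)))  # [5, 8, 16]
```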

Data stream, Spark streaming

Now we focus on software architecture, that is, the logical organization of a system into software components. The most important architectural styles are layered architecture, object-based architecture, and resource-centered architecture.

MVC is a type of client/server system.

https://www.youtube.com/watch?v=nH4qjmP2KEE&ab_channel=ByteByteGo

Application layering

Offline-first software

https://twitter.com/paulgb/status/1707101873252057481

https://twitter.com/paulgb/status/1707410383567200734/photo/1

Object-based and service-oriented architectures

Microservices


@startuml
component Service1 {
  node Machine1
  node Machine2
  node Machine3
  interface interface1
  folder code1
  database service1
}
component Service2 {
  node Machine4
  node Machine5
  node Machine6
  folder code2
  interface interface2
  database service2
}
component Service3 {
  node Machine7
  node Machine8
  node Machine9
  folder code3
  interface interface3
  database service3
}
component SomeFrontOffice<<Mashup>> {
  interface UserInterface
}
note right: For example, a Web app
component AnotherFrontEnd {
  interface UserInterface2
}
note right: For example, a Mobile app or Desktop
cloud CloudVendor
actor "User"
UserInterface --> interface1
UserInterface --> interface2
UserInterface --> interface3
UserInterface --> CloudVendor
User --> UserInterface
User --> UserInterface2
UserInterface2 --> interface1
@enduml

Resource-based architectures

Middleware organization

System architecture

Decentralized organizations

P2P systems

Overlay network

Bootstrapping node

Example

Batch processing

A job or batch refers to a process that is executed offline, meaning it requires no user interaction, but it will run under certain conditions or events. We execute jobs when we need to handle a large amount of data for the task at hand. For instance, typical batch operations include DataOps tasks, data governance, and machine learning training, which ingest large amounts of data for downstream consumption.

The hardware matters when you do Deep Learning: you should choose wisely among CPU, GPU, and TPU.

Google MapReduce → Hadoop → Spark

Data engineering vs. DataOps.

Kafka, Flink, Spark, Pulsar, etc

Amazon Kinesis, Google Cloud Pub/Sub, Google Cloud Dataflow

Workflow orchestrators and Scheduler

Pipe

Apache AirFlow

Luigi

Apache Oozie

Conductor

https://www.youtube.com/watch?v=MF5OaQEOF2E

Cron Job

Queue architecture

When you prefer a simple approach, a queue architecture can be a good fit. Either a user or another job can dispatch a job to a queue, which could be managed by Redis, Beanstalkd, Amazon's Simple Queue Service (SQS), or even a database table. Meanwhile, your application employs a queue worker, which processes and filters jobs in the background based on certain criteria such as priority. The queue worker can be a new thread, a new process, or an event if you're managing an event loop in a single-thread language.
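A queue worker that picks jobs by priority can be sketched with the standard library alone. This is a minimal illustration under stated assumptions: an in-process `queue.PriorityQueue` stands in for Redis, Beanstalkd, SQS, or a database table, the worker is a single background thread, and the job names are made up. The function name `run_worker` is hypothetical.

```python
import queue
import threading


def run_worker(jobs):
    """Dispatch (priority, name) jobs to a queue and process them in a worker thread.

    A lower number means a higher priority.
    """
    job_queue = queue.PriorityQueue()
    for priority, name in jobs:
        job_queue.put((priority, name))

    processed = []

    def worker():
        while True:
            try:
                priority, name = job_queue.get_nowait()
            except queue.Empty:
                return  # nothing left to do
            processed.append(name)  # a real worker would run the job here
            job_queue.task_done()

    thread = threading.Thread(target=worker)
    thread.start()
    thread.join()
    return processed


print(run_worker([(2, "send-email"), (1, "backup"), (3, "resize-image")]))
```

With a single worker the jobs come out strictly in priority order. With several workers the order in which jobs finish is no longer guaranteed, only the order in which they are taken from the queue.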

Queues with delays.

Map-reduce algorithm

Pipe-filter architecture

Unix philosophy, HDFS (Hadoop Distributed File System). All-reduce algorithm. The two main problems that batch processing programmers need to solve are partitioning and fault tolerance. Collective operation. GFS.

Message Passing Interface (MPI).

Stream processing

Event-driven architecture

Event loop

Micro task and Macro task

Callbacks

Async

Event Queue

Inbox and outbox pattern

Design Patterns

Design Patterns for Cloud Native Applications by Kasun Indrasiri, Sriskandarajah Suhothayan

System design

| File | Last modified | Size |
|---|---|---|
| Blackboard.pdf | 2019-07-22 11:49 | 139K |
| ClientServer.pdf | | 128K |
| Command.pdf | | 120K |
| Composite.pdf | | 93K |
| Decorator.pdf | | 110K |
| Event.pdf | | 89K |
| Facade.pdf | | 112K |
| Layered.pdf | | 146K |
| MobileCode.pdf | | 68K |
| Observer.pdf | | 148K |
| Peer2Peer.pdf | | 137K |
| PipeFilter.pdf | | 77K |
| PlugIn.pdf | | 182K |
| Proxy.pdf | | 129K |
| PublishSubscribe.pdf | | 150K |
| State.pdf | | 101K |
| Strategy.pdf | | 144K |
| Visitor.pdf | | 192K |

Algorithms

Case study

Astrolabe

Robbert Van Renesse, Kenneth P. Birman, and Werner Vogels. 2003. Astrolabe: A robust and scalable technology for distributed system monitoring, management, and data mining. ACM Trans. Comput. Syst. 21, 2 (May 2003), 164–206. https://doi.org/10.1145/762483.762485

Globule

Globule – Scalable Web application hosting. (2023, February 09). Retrieved from http://www.globule.org

Jade

Jade Site | Java Agent DEvelopment Framework. (2023, February 09). Retrieved from https://jade.tilab.com

https://dxos.org/ provides developers with everything they need to build real-time, collaborative apps which run entirely on the client, and communicate peer-to-peer, without servers.

WebSocket, WebRTC, SFU (MediaSoup), SIP (Kamailio), RTP

Summary

Code

@startmindmap
!theme superhero
* Architectures
** What it is?
*** Software and hardware components, their organization, and connection.
*** Component is a modular unit with an interface replaceable.
**** The interface ought to be replaceable in runtime.
*** Connection is a set of mechanisms that coordinates components.
** Why?
*** Because it is a universal principle subdividing a complex system into different layers and components.
** Who is a system designer or architect? and what does?
*** She or he chooses, organizes and implements key
**** Protocols
**** Interfaces
**** Services
**** States (e.g. databases)
**** Components
**** Orchestration
** Architectural styles
*** Layered architectures
**** What?
***** Components on a level or layer i call lower-level layers j, that is, j < i
***** It can be async or sync communication
***** Monolith
***** Vertical distribution
**** Why?
***** It is the most popular architecture.
***** Simple to develop.
***** Simple to deploy.
***** Simple testing.
**** Challenges
***** Strong dependency among layers
***** Hard to scale
***** Redeploy the entire
**** Whether it is?
***** Communication-protocol stacks
****** Standard Protocols with different implementations
***** The Web
***** Application layering
****** Multitiered architectures
****** Simple client-server architecture.
******* Request-reply behavior
***** Sun Microsystems' Network File System (NFS)
****** Traditional MVC
******* User-interface level or views
******* Processing level or controllers
******* Models or data level
*** Service-oriented architectures
**** What?
***** Organization into separate, independent entities that encapsulates a service.
***** independent processes
***** independent threads
**** Why?
***** Loose dependency among services
**** Whether it is?
***** Services
***** Objects
****** OOP approach based on network, that is distributed objects and proxies.
****** Technologies
******* SOAP
******* RPC
******* CORBA
***** Microservices
****** What is it?
******* A lot of small, mutually independent, and encapsulated services.
******** Size does matter!
******* Each of them runs on a separate network and process.
******* Modularization is key
****** Why?
******* Better scaling
******* New technologies
******* Frequent releases
****** Challenges
******* Slower deployment
******* Service discovery
******* Complex configs
****** Technologies
******* Docker
******* Kubernetes
******* OpenShift
******* Istio
***** Coarse-grained service composition
****** What is it?
******* A kind of microservice architecture
******** But some services may not belong to the same organization
****** Why?
******* Our organization serves a Service composition based on a cloud provider.
****** Challenges
******* Service harmony
****** Whether it is?
******* Cloud computing
***** Resource-based architectures
****** What is it?
******* A collection of resources managed by remote components by CRUD operations
****** Whether it is?
******* GraphQL
******** Queries and Mutations
******** Types
******* Representational State Transfer (REST)
******** PUT, POST, GET, DELETE
******** Users identify resources by a single naming scheme
******** Services offer the same interface as the four operations
******** Messages are fully self-described
******** Stateless execution, that is, after an operation, the component forgets the caller.
******** Based on HTTP
*** Publish-subscribe architectures
**** What is it?
***** Strong separation between processing and coordination
***** The system is a collection of autonomous processes.
***** Temporal dimension
***** Processes must be running at the same time.
***** Referential dimension
****** Exists reference between processes such as name or an identifier
**** Whether it is?
***** Topic-based publish-subscribe systems
***** Content-based publish-subscribe systems
***** Event bus systems
***** RSS
***** Mailbox
***** Shared data space
****** Linda (coordination language)
****** Qlib-Server
***** Map-Reducers schemes
****** GFS
****** Hadoop
***** Reactive schemes
****** RxJS
***** Stream schemes
****** Cell phones
** Middleware
*** Goal
**** Achieve the open-closed principle
*** Design patterns
**** Wrappers or Adapters
***** What it is?
****** Special component that offers an acceptable interface.
****** It solves the problem of incompatible interfaces.
**** Why?
***** Allow the construction of adaptive software.
***** Whether it is?
****** A broker that centralizes wrappers. O(n) wrappers.
****** A wrapper by each client. O(n^2) wrappers.
**** Interceptors
***** What is it?
****** A software that breaks the flows and executes another software.
***** Whether it is?
****** Proxy JavaScript
** Symmetrically distributed architectures
*** What is?
**** Horizontal distribution
**** Peer is a client and server at the same time, that is, a servant.
*** Structured peer-to-peer systems
**** Attempt to maintain a specific, deterministic overlay network.
**** Distributed hash tables and hypercubes
*** Unstructured peer-to-peer systems
**** Each node maintains an ad hoc list of neighbors.
**** Random graph
***** Flooding
***** Random walks
*** Hierarchically organized peer-to-peer networks
**** Supernodes and leader election problem
**** Whether it is?
***** BitTorrent
** Hybrid system architectures
*** Cloud computing
**** Outsourcing local computing
***** Contrary to on-premises services
**** Service-level agreements
***** Hardware
***** Infrastructure (IaaS)
***** Platform (PaaS)
***** Function (FaaS)
***** Application (SaaS)
*** The edge-cloud architecture
**** Internet-of-Things (IoT)
*** Blockchain architecture
**** The principle operation of a blockchain
***** A node broadcasts a transaction
***** A validator collects transactions into a block
***** A single validated block is broadcast to all the nodes
@endmindmap

Worked examples

Processes

Threads in distributed systems

The most important property of threads is that they allow blocking system calls without blocking the entire process in which the thread is running. This makes it much easier to express multiple logical connections at the same time.
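The property described above can be observed with a small experiment. This is a sketch under stated assumptions: `time.sleep` stands in for a blocking system call such as a socket read, and the names `blocking_call` and `run` are hypothetical. Each thread blocks for 0.2 s, yet the process as a whole finishes in roughly 0.2 s rather than 0.8 s, because a blocked thread does not stop the others.

```python
import threading
import time


def blocking_call(results, i):
    # time.sleep stands in for a blocking system call (an assumption of this
    # sketch); it blocks only the calling thread, not the whole process.
    time.sleep(0.2)
    results[i] = i


def run(n=4):
    results = [None] * n
    threads = [threading.Thread(target=blocking_call, args=(results, i)) for i in range(n)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results, time.perf_counter() - start


results, elapsed = run()
print(results, f"{elapsed:.2f}s")
```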

Virtualization

Examples are Docker, Virtual Machines, and Emulators.

OS

Docker, OpenVZ/Virtuozzo, and LXC/LXD.

Clients

If you must establish a high degree of distribution transparency on the client side, that is, hide communication latencies, the most common approach is to initiate communication right away, display something to the user quickly, and never block the user interface. All of this can be done with threads.

Replicated calls from a client

A replicated call is a remote procedure call (RPC) between a node and the replicas, that is, the other nodes in the system that hold a copy of the data or chunk being requested. A response may or may not be received, due to system design or network problems.

Typically, a replicated call refers to a situation where multiple replicas of a server-side application are involved in processing a client's request.
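A replicated call can be sketched with a thread pool that forwards the same request to every replica. This is only an illustration under stated assumptions: replicas are plain local functions with artificial delays instead of remote nodes, and all names (`make_replica`, `replicated_call`, `first_response`, `majority_value`) are hypothetical. The `combine` argument decides what the caller gets back, for example the first response to arrive or the value most replicas agree on.

```python
import time
from collections import Counter
from concurrent.futures import ThreadPoolExecutor, as_completed


def make_replica(name, delay, value):
    """Build a hypothetical replica: a function that answers after `delay` seconds."""
    def handle(query):
        time.sleep(delay)  # stands in for network and processing time
        return name, value
    return handle


def replicated_call(replicas, query, combine):
    """Forward `query` to every replica and combine the responses."""
    with ThreadPoolExecutor(max_workers=len(replicas)) as pool:
        futures = [pool.submit(replica, query) for replica in replicas]
        # Responses are collected in arrival order, fastest replica first.
        responses = [future.result() for future in as_completed(futures)]
    return combine(responses)


def first_response(responses):
    return responses[0]


def majority_value(responses):
    counts = Counter(value for _, value in responses)
    return counts.most_common(1)[0][0]


replicas = [
    make_replica("replica1", 0.15, 42),
    make_replica("replica2", 0.01, 42),
    make_replica("replica3", 0.30, 41),  # a stale copy
]
print(replicated_call(replicas, "balance", first_response))
print(replicated_call(replicas, "balance", majority_value))
```

For simplicity this sketch waits for every replica before combining, even when only the first response is used. A real proxy would more likely return as soon as it has enough responses and would add timeouts for replicas that never answer.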

Code

@startuml
title Replicated Calls
actor Client
entity "Proxy, request-level interceptor" as Proxy
database Replica1
database Replica2
database Replica3
note over Replica1, Replica2: A replica is a node that holds a copy of data or a chunk of it.
Client->Proxy: B.request(query,data) // read or write the data
group n replicated calls
  Proxy->Replica1: Forward request
  Proxy->Replica2: Forward request
  Proxy->Replica3: Forward request
end
Replica1->Proxy: Send response
Replica2->Proxy: Send response
Replica3->Proxy: Send response
Proxy->Client: It applies a pipe() to responses, for example, it could send either the first response, or the last response, or combines all responses if you need agreement or chunks from replicas.
@enduml

Web browser

Servers

X Window System

The X Window System designates the user’s terminal as the host of the server, while each application is a client. This makes sense because there is a limited resource and $n$ applications that want to access it, so the X server coordinates the clients and the resource's interrupts.

Proxies

Reverse proxy

traefik, ngrok, …

Cache

An alternative solution to caching strategies

Filters are probabilistic data structures
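One well-known filter of this kind is the Bloom filter, which is used here as an assumed example since the notes do not name a specific structure. The sketch below is minimal and not tuned: the size, the number of hash functions, and the use of SHA-256 with a seed prefix are all arbitrary choices for illustration. The filter can answer "definitely not present" or "possibly present", so it can be consulted before an expensive lookup.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter: no false negatives, occasional false positives."""

    def __init__(self, size=1024, hashes=3):
        self.size = size
        self.hashes = hashes
        self.bits = bytearray(size)  # one byte per bit, for simplicity

    def _positions(self, item):
        # Derive `hashes` positions by prefixing the item with a seed.
        for seed in range(self.hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item):
        for position in self._positions(item):
            self.bits[position] = 1

    def might_contain(self, item):
        # False means "definitely absent"; True means "possibly present".
        return all(self.bits[position] for position in self._positions(item))


seen = BloomFilter()
print(seen.might_contain("example.com/1"))  # False: nothing was added yet
seen.add("example.com/1")
print(seen.might_contain("example.com/1"))  # True
```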

Code migration

Webhook

Cache

Checkpoint and restore

Actor model

https://arxiv.org/pdf/1008.1459.pdf

Queue system analysis

https://www.youtube.com/watch?v=z611FLLR3_8&ab_channel=GeoffreyMessier

https://www.youtube.com/watch?v=Hda5tMrLJqc&ab_channel=USENIX

https://www.youtube.com/watch?v=oQGreeij-OE&ab_channel=8thLightInc.

Job scheduler

Summary

Code

@startmindmap
* Processes
** What is it?
*** A program in execution
** How can we develop cooperative programs?
*** IPC
** Important advantage over threads
*** Processes don't share the data space
** Threads
*** Why?
**** We require hiding blocking operations
**** We must exploit a multicore system
**** Threads offer easier communication with each other and better performance than processes
*** When does the operating system execute them?
**** Context Switching
**** Thread Switching
**** User space
*** Model
**** 1 process: N user-space threads: M kernel space threads: U cores
** Virtualization
*** What?
**** A proxy that mimics the behavior of another system interface
***** Instruction set architecture (ISA)
***** System calls
***** Application programming interface (API)
***** The software environment
*** Why?
**** Portability
***** Isolation
***** You're decoupling hardware and software
***** Reproducible
**** Maintain legacy projects
**** Flexibility to change underlying systems
*** Whether it is?
**** A process virtual machine
***** Runtime system
****** Java
***** Emulation
****** Wine Linux
**** A native virtual machine monitor
**** A hosted virtual machine monitor
**** Containers
***** Unix mechanisms
****** chroot
****** namespaces
****** union file system
****** control groups
***** Docker+Kubernetes
***** Linux containers (LXC)
***** PlanetLab (Vservers)
**** Nix
** Client architecture
*** Networked user interfaces
**** Users interact with remote servers
***** by a specific protocol
***** by an independent protocol
**** Whether it is?
***** X Window System
*** Virtual desktop environment
**** The user has software to access the virtual desktop environment
**** Whether it is?
***** Chrome OS
***** Web Browser
*** Client-side software for distribution transparency
** Server architecture
*** It waits for incoming requests and takes care of them.
*** General design issues
**** Synchronization
***** Iterative or sync
***** Concurrent or async
**** Contacting
**** Interrupting
**** State
***** Stateless
***** Stateful
*** Object servers
**** The server provides an interface to invoke local objects
***** Activation policies
***** Mechanism, Object adapter
**** Whether it is?
***** The Ice Runtime system
***** The Apache Web Server
*** Server clusters
**** A set of machines connected through a network
** Code migration
*** A process is moved from one node to another.
*** Reasons
**** Performance
**** Privacy and security
**** Flexibility
*** Models
**** Sender-initiated migration
**** Receiver-initiated migration
*** Migration in heterogeneous systems
**** Process virtual machine
@endmindmap

Exercises

Communication

Protocols

Routing algorithms

Deadlock-free packet switching

Wave and Traversal Algorithms

Election algorithms

Termination detection

Anonymous Networks

Snapshots

Sense of direction and orientation

DNS

Synchrony in networks

Remote procedure call (RPC)

A remote procedure call (RPC) is a function or method call that looks local but internally sends data to and receives data from a remote process. It must hide the message-passing nature of network communication. Many technologies follow or resemble this model, such as REST, SOAP, gRPC, Ajax in web browsers, GraphQL, CORBA, and Thrift.
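To make the idea of a transparent call concrete, here is a minimal sketch of a client stub and a server dispatcher. It is not how any of the technologies listed above is implemented: the `calculator` service, `handleMessage`, and `createStub` are hypothetical names, JSON is used as the marshaling format, and an in-process function stands in for the network.

```javascript
// Server side: the real implementation plus a dispatcher that unmarshals a
// request message, invokes the procedure, and marshals the reply.
const calculator = {
  add: (a, b) => a + b,
  divide: (a, b) => {
    if (b === 0) throw new Error("division by zero");
    return a / b;
  },
};

function handleMessage(service, message) {
  const { method, params } = JSON.parse(message);
  try {
    return JSON.stringify({ result: service[method](...params) });
  } catch (error) {
    return JSON.stringify({ error: error.message });
  }
}

// Client side: a stub whose methods look local but only build a message,
// hand it to `transport`, and unpack the reply.
function createStub(transport) {
  return new Proxy({}, {
    get: (_target, method) => (...params) => {
      const reply = JSON.parse(transport(JSON.stringify({ method, params })));
      if ("error" in reply) throw new Error(reply.error);
      return reply.result;
    },
  });
}

// In-process transport standing in for the network (an assumption of this sketch).
const remote = createStub((message) => handleMessage(calculator, message));
console.log(remote.add(2, 3)); // 5
```

The caller writes `remote.add(2, 3)` as if `add` were local; only the stub and the dispatcher know that a message was exchanged. A real transport would be asynchronous and could fail, which is where full transparency breaks down.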

Webhook

Message-oriented communication

Multicast communication

Worked examples

Summary

@startmindmap

!theme materia

* Communication

** OSI Model

*** What is it?

**** Communication protocols

***** Application, Presentation, Session, Transport, Network, Data link, Physical

***** Message = payload + header for each protocol

**** Middleware protocols

***** What is it?

****** General-purpose protocols that warrant their own layer

***** Examples

****** DNS

****** Authentication and authorization protocols

*** Why?

**** It allows thinking about open systems to communicate with each other

*** Examples

**** Connectionless services

***** Email

**** Connection-oriented communication

***** Telephone

** Remote procedure call

*** Why?

**** Achieve transparency

*** What is it?

**** The real implementation is executed in another node over a network

*** Examples

**** API REST

**** GraphQL

**** gRPC

**** CORBA

**** Ajax in browsers

*** How?

**** Local stub communicates with the server via passing messages

**** Transport Procedure Call

**** Transparent calls

**** Marshaling and Serialization

**** Interface Definition Language (IDL)

***** Issue

****** Code migration and references

** Message-oriented communication

*** Issues

**** Does the communication persist?

**** Is the communication synchronous?

*** How?

**** Async communication

**** Sync communication

**** Technologies

***** Socket interfaces

***** Blocking calls

*** Solution

**** Design Patterns

***** Pipeline pattern

***** Publish-subscribe pattern

***** Request-reply pattern

***** Message-queuing systems

***** Message brokers

**** Message-oriented middleware

***** When?

****** When the RPCs are not appropriate.

***** Examples

****** MQTT

****** ZeroMQ

****** The Message-Passing Interface (MPI)

** Multicast communication

*** What is it?

**** Disseminate information across multiple subscribers.

**** Examples

***** IPFS

*** How?

**** Peer-to-peer system

***** Structured overlay network

***** Every peer sets up a tree

***** Distributed hash table (DHT), Distributed data

**** Flooding messages across the network

**** Epidemic protocols

***** Simple

***** Robust

@endmindmap

Naming

Should naming be decentralized or centralized?

Flat naming

Structured naming

Attribute-based naming

Worked examples

Summary

Code

mindmap
  root((Naming))
    (Names)
      What?
        It identifies entities
      Whether it is?
        Processes
        Users
        Network connections
      How?
        Naming system
        ::icon(fa fa-ear-listen)
        Access point or address
        Location independent
        ::icon(fa fa-location-dot)
    (Flat naming)
      What?
        Names are random bit strings
        Good for machines
      How?
        Broadcasting
        Multicasting
        Forwarding pointers
        (Home-based approaches)
        Distributed hash tables
        Exploiting network proximity
        Hierarchical approaches
        Secure flat naming
    (Structured naming)
      What?
        Good for humans
        Naming systems
      How?
        Name spaces
        ::icon(fa fa-diagram-project)
        Name resolution
        Closure mechanism
        Linking and mounting
        Name space distribution
      Whether it is?
        DNS
    (Attribute-based naming)
      What?
        Search based on a description
        ("(Attribute, value)")
      How?
        Directory services
        Decentralized implementations
        (Space-filling curves)
      Whether it is?
        Resource Description Framework
        Lightweight Directory Access Protocol
        Mermaid
    (Named-data networking)
      What?
        (Information-centric networking)
        Get a copy to access it locally
      How?
        New routing

Coordination

Clock synchronization

Summary

Code

mindmap
  root((Coordination))
    [Clock synchronization]
      What?
        It's doing the right thing at the right time
      Why?
        Data synchronization
        Process synchronization
        Time is so basic to the way people think
      Examples
        File timestamps
        Financial brokerage
        Security auditing
        Collaborative sensing
        Real time systems
    [Physical clocks]
      What?
        A computer timer made from a machined quartz crystal
        Counter, Holding and Clock Ticks
        CMOS RAM
      Issues
        Leap second
        Clock skew
      Examples
        Coordinated Universal Time
        Exchanging clock values
    [Logical clocks]
      What?
        It's unnecessary to know the absolute time, but
        Related events at different processes must happen in the correct order
      How?
        Each event e is assigned a unique timestamp C e
      Examples
        Lamport clocks
        Vector timestamps
    [Mutual exclusion]
      What?
        At most one process at a time has access to a shared resource.
      How?
        It can be achieved easily with a coordinator.
        Fully distributed algorithms exist, but they can go wrong.
    [Election algorithms]
      What?
        An election algorithm chooses a new leader in a DS.
      Why?
        If the coordinator is not fixed, it is necessary to choose a coordinator.
        When the coordinator crashes, you must choose a new leader.
    [Gossip-based coordination]
      What?
        Coordinate a peer to peer system.
      How?
        Choose another peer randomly from an entire overlay.
        Partial view is refreshed regularly and randomly.
      Examples
        Routing
        Tribler
    [Distributed event matching]
      What?
        To route notifications to proper subscribers.
      How?
        One to one comparisons by topic
        Content based matching
      Examples
        Publish subscribe systems
    [Location systems]
      What?
        Take proximity into account
      Examples
        Global Positioning System
        Logical positioning of nodes
        Network coordinate system
        Wigle database
        Centralized positioning
        Decentralized positioning
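The logical-clock branch of the summary can be illustrated with a short sketch of a Lamport clock. The class and method names are chosen for this example; the update rules are the standard ones: increment before each local or send event, and on receipt move past the timestamp carried by the message.

```javascript
// A Lamport logical clock: one integer counter per process.
class LamportClock {
  constructor() {
    this.time = 0;
  }

  // Local event or message send: advance the counter and use it as the timestamp.
  tick() {
    this.time += 1;
    return this.time;
  }

  // Message receipt: jump past the sender's timestamp, counting the receive event.
  receive(messageTime) {
    this.time = Math.max(this.time, messageTime) + 1;
    return this.time;
  }
}

const p1 = new LamportClock();
const p2 = new LamportClock();

p1.tick();                         // P1 local event      -> C = 1
const sent = p1.tick();            // P1 sends a message  -> C = 2
p2.tick();                         // P2 local event      -> C = 1
const received = p2.receive(sent); // P2 receives it      -> C = max(1, 2) + 1 = 3

console.log(sent < received); // true: the send is ordered before the receive
```

The timestamps say nothing about wall-clock time; they only guarantee that causally related events, such as a send and its receive, are numbered in the correct order.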

Worked example

Process 1 and Process 2 behave the same way; however, a transition fires only when both have completed their current state, and the two processes pass through the states in a different order. Let S0, S1, and S2 be the possible states. We define the transitions of the global state of the system as follows:

P1 P2 P1 P2

[S0,S1] -> [S1,S2]

[S1,S2] -> [S2,S0]

[S2,S0] -> [S0,S1]

// Assumption: `match` is the pattern-matching helper from the ts-pattern package.
import { match } from 'ts-pattern';

// Resolves after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

const State = {
  RED: 0,
  YELLOW: 1,
  GREEN: 2
};

class Process {
  constructor(state, name) {
    this.state = state;
    this.name = name;
  }

  // Advance to the next state in the cycle RED -> YELLOW -> GREEN -> RED.
  transit() {
    this.state = (this.state + 1) % 3;
  }

  // Hold the current state for a state-dependent amount of time.
  commit() {
    const time = match(this.state)
      .with(State.RED, () => 3000)
      .with(State.YELLOW, () => 500)
      .with(State.GREEN, () => 200)
      .otherwise(() => 5000);
    return sleep(time);
  }
}

class Coordinator {
  constructor() {
    this.process = [
      new Process(State.GREEN, "A"),
      new Process(State.RED, "B"),
      new Process(State.RED, "C"),
      new Process(State.RED, "D")
    ];
  }

  async start() {
    let consumers = this.process;
    while (true) {
      // Print the global state sorted by process name.
      console.log([...this.process].sort((a, b) => a.name.charCodeAt() - b.name.charCodeAt()).map(x => `(${x.name},${x.state})`));
      // Wait until every process that changed has held its state long enough.
      await Promise.all(consumers.map(c => c.commit()));
      consumers = this.transit();
    }
  }

  // Apply one global transition and return the processes that changed state.
  transit() {
    return match(this.process)
      .with([{state: State.GREEN}, {state: State.RED}, {state: State.RED}, {state: State.RED}], () => {
        this.process[0].transit();
        return [this.process[0]];
      })
      .with([{state: State.YELLOW}, {state: State.RED}, {state: State.RED}, {state: State.RED}], () => {
        this.process[0].transit();
        return [this.process[0]];
      })
      .with([{state: State.RED}, {state: State.RED}, {state: State.RED}, {state: State.RED}], () => {
        // Everyone is RED: rotate so the next process gets its turn.
        this.process = [this.process[1], this.process[2], this.process[3], this.process[0]];
        this.process[0].transit();
        return [this.process[0]];
      })
      .exhaustive();
  }
}

new Coordinator().start();

Questions

Consistency and replication

Stateless and stateful

Atomic objects

Fault tolerance

Fault Tolerance in Distributed systems

Case study

VMware FT

Circuit Breaker Design Pattern

Questions

Fault tolerance

Single point of failure

Glitch

Fault tolerance in Asynchronous systems

Fault tolerance in Synchronous systems

Failure detection

Stabilization

Self-stabilization is a desirable property in distributed algorithms. It refers to the ability of a system to recover from illegitimate states to legitimate states without external intervention. This property can be particularly useful in systems where there may be faults, or in systems that start in an illegitimate state.

The Maximal Strongly Independent Set (MSIS) problem is a well-known problem in graph theory and computer science. A strongly independent set is a subset of nodes in which no two nodes are adjacent or share a common neighbor; it is maximal when no further node can be added without violating this property. Finding a maximum (largest possible) such set is NP-hard in general graphs, whereas a maximal one can be built greedily.

Halin graphs are a particular class of graphs, named after the mathematician Rudolf Halin, which are constructed from a rooted planar tree by embedding a cycle through all the leaves.

A self-stabilizing algorithm for the MSIS problem in geometric Halin graphs could be developed iteratively, where the nodes of the graph update their status based on the states of their neighbors until the system reaches a stable state. A key consideration here would be ensuring that the algorithm is efficient, i.e., it converges quickly and uses a minimal amount of resources.
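The iterative scheme described above can be illustrated with a simpler, related problem. The sketch below is not an MSIS algorithm for geometric Halin graphs: it is the classic self-stabilizing rule for an ordinary maximal independent set, run under the assumption of a central scheduler that lets one node move at a time. Function names and the example graph are chosen for this illustration.

```javascript
// Self-stabilizing maximal independent set under a central scheduler
// (one enabled node moves at a time). `graph` maps node -> list of neighbors;
// `inSet` maps node -> boolean and may start in ANY state, legitimate or not.
function stabilize(graph, inSet) {
  const nodes = Object.keys(graph);
  let moves = 0;
  for (;;) {
    // A node is enabled when its local state contradicts its neighborhood:
    // it is in the set next to another member, or out with no member nearby.
    const enabled = nodes.find((v) => {
      const neighborIn = graph[v].some((u) => inSet[u]);
      return inSet[v] ? neighborIn : !neighborIn;
    });
    if (enabled === undefined) return moves; // legitimate state reached
    inSet[enabled] = !inSet[enabled];        // leave or enter the set
    moves += 1;
  }
}

// True when no two members are adjacent and no node can still be added.
function isMaximalIndependent(graph, inSet) {
  return Object.keys(graph).every((v) => {
    const neighborIn = graph[v].some((u) => inSet[u]);
    return inSet[v] ? !neighborIn : neighborIn;
  });
}

// A wheel graph (hub 0, rim 1-2-3-4), which is a Halin graph: a star tree
// plus a cycle through its leaves. It starts in an illegitimate state where
// every node claims membership.
const wheel = { 0: [1, 2, 3, 4], 1: [0, 2, 4], 2: [0, 1, 3], 3: [0, 2, 4], 4: [0, 1, 3] };
const state = { 0: true, 1: true, 2: true, 3: true, 4: true };
stabilize(wheel, state);
console.log(isMaximalIndependent(wheel, state)); // true
```

Each node decides using only its neighbors' states, and the system converges from any starting configuration without external intervention. Once a node enters the set its neighbors can no longer enter, so it never leaves again and every node moves at most twice.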

Process resilience

Reliable client-server communication

Distributed commit

Capacity Planning

CAP theorem

Summary

Security

Monitoring and observability

Observability is the ability to infer the state of a system from its outputs, which in a distributed system are metrics, logs, and traces. Monitoring, on the other hand, consists of checking processes and analyzing metrics over a period of time, typically in monolithic systems. Therefore, monitoring tasks are a subset of observability tasks.

Logs

Event logs

syslogs

netflow

packet captures

firewall logs

Tools

OpenTelemetry

OpenMetrics

Nagios

Prometheus

Grafana

New Relic

logz.io

Instana

Splunk

AppDynamics

Elastic

Dynatrace

Datadog

OpenCensus

Operating system journals

https://github.com/louislam/uptime-kuma

Grafana and Prometheus

References

R. E. Kalman, “On the General Theory of Control Systems,” Proceedings of the First International Congress on Automatic Control, Butterworth, London, 1960, pp. 481-493.

Observability at Twitter https://blog.twitter.com/engineering/en_us/a/2013/observability-at-twitter, https://blog.twitter.com/engineering/en_us/a/2016/observability-at-twitter-technical-overview-part-i

Practical Monitoring by Mike Julian

The Art of Monitoring by James Turnbull

Security

Authentication and authorization

LDAP server

Vault

OAuth 2.0

Multi-tenancy

Data centers

Computer cluster

Lustre (file system)

https://www.allthingsdistributed.com/2023/07/building-and-operating-a-pretty-big-storage-system.html

Tutorials and assignments

We will explore some alternatives to perform distributed computing, both locally and in a network, starting from the most basic approaches and progressing to the use of the most feature-rich libraries.

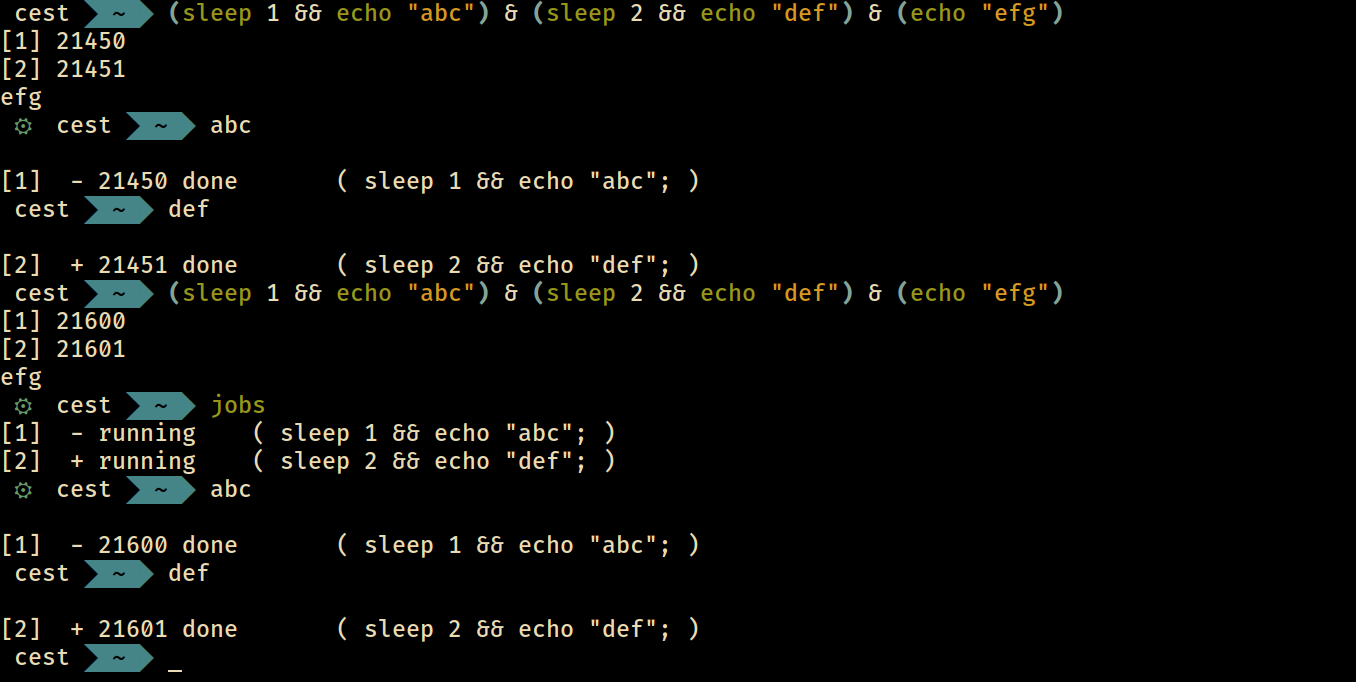

The “&” operator

(sleep 1 && echo "abc") & (sleep 2 && echo "def") & (echo "efg")

GNU parallel

In my opinion, GNU Parallel works well for complex tasks when run locally, but it can be difficult to use on a cluster. You can see for yourself using https://www.msi.umn.edu/support/faq/how-can-i-use-gnu-parallel-run-lot-commands-parallel.

Examples

cd /tmp/ && touch {1..5}.my_random_file && find . -type f -name '*.my_random_file' | parallel gzip --best

parallel echo ::: 😀 😃 😄 😁 😆 😅 😂 🤣 🥲 done!

touch images.txt && parallel printf 'https://picsum.photos/200/300\\n%.0s' ::: {1..1000} | parallel -j+0 -k "echo {} | tee -a images.txt" && cat images.txt | parallel wget

touch images.txt && parallel -j+0 "printf 'https://picsum.photos/200/300\\n%.0s'" ::: {1..2} | parallel -j+0 "echo {} >> images.txt" && parallel -a images.txt -j+0 wget

xargs

cat images.txt | xargs -n 1 -P 10 curl -s | gawk '{print $1}'

JobLib

Dask

Ray

On the other hand, Ray performs well for complex tasks on an on-premise cluster, but when used locally, it can be overwhelming.

A distributed Hello World program

Network File System

Hadoop and Spark

Open MPI

Low-level alternative

LibP2P

Location-addressing approach vs content addressing

Content identifier
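The difference between the two addressing approaches can be sketched as follows. This is a simplified illustration: the identifier here is a bare SHA-256 hex digest, whereas real content identifiers such as those used by IPFS wrap the hash in additional self-describing encoding. `contentAddress` and `ContentStore` are hypothetical names.

```javascript
const { createHash } = require("crypto");

// Location addressing: the name says WHERE the data lives (host and path).
// Content addressing: the name is derived from WHAT the data is.
function contentAddress(data) {
  return createHash("sha256").update(data).digest("hex");
}

// A toy content-addressed store: any peer holding the bytes can serve them,
// and the requester can verify them by re-hashing.
class ContentStore {
  constructor() {
    this.blocks = new Map();
  }

  put(data) {
    const id = contentAddress(data);
    this.blocks.set(id, data);
    return id;
  }

  // Returns the data only if it still hashes to the requested identifier.
  get(id) {
    const data = this.blocks.get(id);
    if (data === undefined || contentAddress(data) !== id) return undefined;
    return data;
  }
}

const store = new ContentStore();
const id = store.put("hello, peers");
console.log(store.get(id)); // hello, peers
```

Because the identifier depends only on the bytes, identical content stored by different peers gets the same identifier, and tampered content fails the check in `get`.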

Peer. A participant in a decentralized network that is equally privileged.

Luigi

Cloud services

Backend-as-service

Firebase

Supabase

Heroku

Postgresql

Blockchain and smart contracts

Kubernetes and docker

Queue messaging

MQTT

Message broker

Quality of service

OASIS (organization)

ZeroMQ

Apache Kafka

Quality of service

Message-oriented middleware

Message queuing service

Ope

Your own map-reducer

https://pdos.csail.mit.edu/6.824/labs/lab-mr.html

Other Alternatives

Object storage

1. Network Attached Storage (NAS) - a file-level storage architecture that allows multiple users to access shared files over a network.

2. Distributed File System (DFS) - a protocol that allows users to access files on multiple servers as if they were on a single shared drive.

3. iSCSI (Internet Small Computer System Interface) - a protocol used for connecting storage devices over a network as if they were directly attached to a computer.

4. Remote Direct Memory Access (RDMA) - a protocol used for direct memory access between servers over a network, often used for high-performance computing and data-intensive applications.

6. AFS (Andrew File System) - a distributed file system designed for large-scale collaboration and data sharing, built by Carnegie Mellon University.

8. GlusterFS - a distributed file system designed for scalability and high availability, often used for cloud computing and big data applications.

9. Lustre - a parallel distributed file system designed for high-performance computing and scientific applications.

10. File Transfer Protocol (FTP) and SSH File Transfer Protocol (SFTP) - protocols used for transferring files over a network, often used for website hosting.

version: '3'
services:
  sftp:
    image: atmoz/sftp
    ports:
      - "2222:22"
    environment:
      SFTP_USERS: sftpuser:pass:1001:/data
    volumes:
      - /path/to/sftpdata:/data

11. WebNative FileSystem (WNFS)

- MinIO - an open-source, self-hosted cloud-based object storage service that can be deployed on-premises or in a cloud environment.

- S3

- Ceph - an open-source, software-defined storage system that can be used to create a distributed object store.

- OpenStack Swift - an open-source object storage system that can be used to create a scalable and highly available object-store.

- Apache Cassandra - a distributed NoSQL database system that can be used to store and retrieve large amounts of data.

- GlusterFS - an open-source, distributed file system that can be used to create a scalable and highly available object store.

- Riak KV - an open-source, distributed NoSQL database system that can be used to store and retrieve large amounts of data.

- OpenIO - an open source, object storage, and data management platform that can be used to store and retrieve large amounts of data.

- Scality RING - a commercial, software-defined distributed storage system that can be used to create a highly available and scalable object store.

- SwiftStack - a commercial storage platform built on OpenStack Swift that can be used to create a distributed object store.

- Nextcloud Object Storage - an open-source, self-hosted cloud storage system that includes an object storage component that can be used to store and retrieve large amounts of data.

- SSH and RSYNC over SSH

https://hub.docker.com/r/linuxserver/openssh-server

---
version: "2.1"
services:
  openssh-server:
    image: lscr.io/linuxserver/openssh-server:latest
    container_name: openssh-server
    hostname: openssh-server #optional
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=Etc/UTC
    volumes:
      - ./config:/config
    ports:
      - 2222:2222
    restart: unless-stopped

- Network File System

- Server Message Block (SMB) and CIFS/SMB

- BitTorrent Sync

- Atom (web standard)

- Content Management Interoperability Services

- Linked Data Platform

- Unison File Synchronizer https://github.com/bcpierce00/unison

- netcat (nc)

nc -lk 1234 < ~/shared

Storage Area Network

Simulations

Simgrid

OverSim

Electronic voting

Next steps

References

Tanenbaum, Andrew S.; Steen, Maarten van (2002). Distributed systems: principles and paradigms. Upper Saddle River, NJ: Pearson Prentice Hall. ISBN 0-13-088893-1.

6.5840 Home Page: Spring 2023. (2023, January 19). Retrieved from https://pdos.csail.mit.edu/6.824

"Distributed Programs". Texts in Computer Science. London: Springer London. 2010. pp. 373–406. doi:10.1007/978-1-84882-745-5_11. ISBN 978-1-84882-744-8. ISSN 1868-0941.

Lynch, Nancy. Distributed Algorithms. Burlington, MA: Morgan Kaufmann, 1996. ISBN:9781558603486.

Attiya, Hagit, and Jennifer Welch. Distributed Computing: Fundamentals, Simulations, and Advanced Topics. 2nd ed. New York, NY: Wiley-Interscience, 2004. ISBN: 9780471453246.

Herlihy, Maurice, and Nir Shavit. The Art of Multiprocessor Programming. Burlington, MA: Morgan Kaufmann, 2008. ISBN: 9780123705914.

Guerraoui, Rachid, and Michal Kapalka. Transactional Memory: The Theory. San Rafael, CA: Morgan and Claypool, 2010. ISBN: 9781608450114.

Dolev, Shlomi. Self-Stabilization. Cambridge, MA: MIT Press, 2000. ISBN:9780262041782.

Kaynar, Dilsun, Nancy Lynch, Roberto Segala, and Frits Vaandrager. The Theory of Timed I/O Automata. 2nd ed. San Rafael, CA: Morgan and Claypool, 2010. ISBN: 9781608450022. https://groups.csail.mit.edu/tds/papers/Lynch/Monograph-second-edition.pdf

MIT 6.033

MIT 6.5840

MIT 6.824. (2023, January 27). MIT OpenCourseWare. Retrieved from https://ocw.mit.edu/courses/6-824-distributed-computer-systems-engineering-spring-2006

MIT 6.824: Distributed Systems. "Lecture 1: Introduction." YouTube, 6 Feb. 2020, www.youtube.com/watch?v=cQP8WApzIQQ&list=PLrw6a1wE39_tb2fErI4-WkMbsvGQk9_UB&ab_channel=MIT6.824%3ADistributedSystems.

Saltzer and Kaashoek's Principles of Computer System Design: An Introduction (Morgan Kaufmann, 2009).

Nancy Lynch, Michael Merritt, William Weihl, and Alan Fekete. Atomic Transactions. Morgan Kaufmann Publishers, 1994.

MIT OpenCourseWare. (2023, January 27). MIT OpenCourseWare. Retrieved from https://ocw.mit.edu/courses/6-852j-distributed-algorithms-fall-2009

cs6234-16-pds https://www.comp.nus.edu.sg/~rahul/allfiles/cs6234-16-pds.pdf

Stevens, W. Richard, Bill Fenner, and Andrew M. Rudoff. UNIX Network Programming, Vol. 1: The Sockets Networking API. 3rd ed. Reading, MA: Addison-Wesley Professional, 2003. ISBN: 9780131411555.

McKusick, Marshall Kirk, Keith Bostic, Michael J. Karels, and John S. Quarterman. The Design and Implementation of the 4.4 BSD Operating System. Reading, MA: Addison-Wesley Professional, 1996. ISBN: 9780201549799.

Stevens, W. Richard, and Stephen Rago. Advanced Programming in the UNIX Environment. 2nd ed. Reading, MA: Addison-Wesley Professional, 2005. ISBN: 9780201433074.

Indrasiri, K., & Suhothayan, S. (2021). Design Patterns for Cloud Native Applications. O'Reilly Media, Inc.

"Distributed Systems lecture series." YouTube, 31 Jan. 2023, www.youtube.com/playlist?list=PLeKd45zvjcDFUEv_ohr_HdUFe97RItdiB.

https://ki.pwr.edu.pl/lemiesz/info/Tel.pdf

https://www.youtube.com/@DistributedSystems

https://www.youtube.com/@lindseykuperwithasharpie

https://www.youtube.com/@rbdiwate626

IPFS and libp2p

TODO

Secure multi-party computation

proxies

reverse-proxy